Most AI coding assistants break down in real codebases.

If you've tried using AI coding assistants on anything larger than a toy project, you've probably hit the same wall we did: they work great for isolated functions but fall apart when they need to understand how your actual codebase fits together.

The problem isn't the underlying AI models; it's context. When an AI assistant only sees a few lines around your cursor, it can't understand the broader architecture, existing patterns, or how changes in one file might affect dozens of others. You end up with suggestions that look reasonable in isolation but break when integrated into your real system.

After watching developers struggle with this limitation across codebases ranging from startup MVPs to enterprise monorepos with 100k+ files, we built Augment Agent to solve the context problem from the ground up.

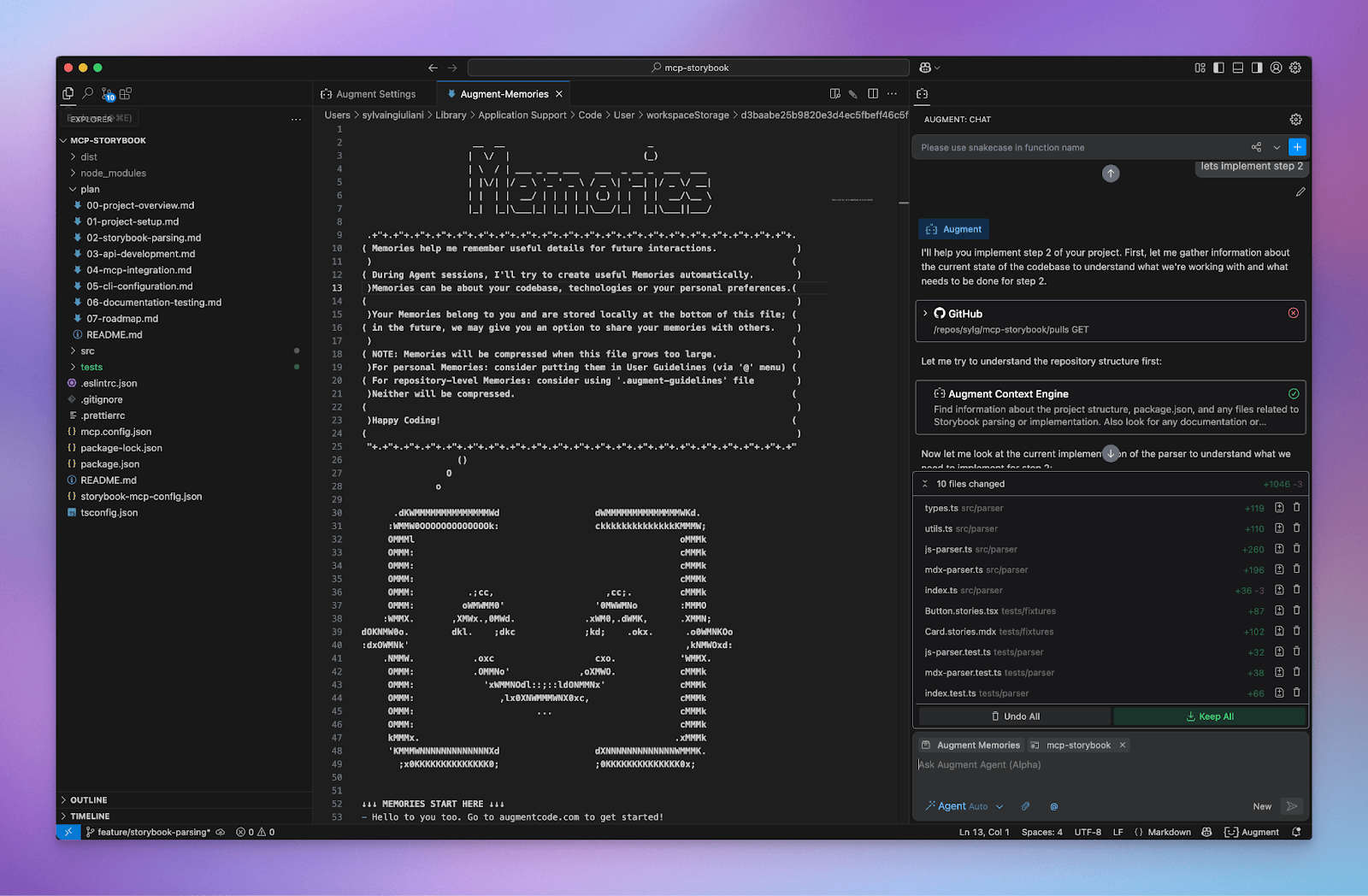

Augment Agent can automate tasks and parallelize work in repos. In this demo, the Agent is asked to implement a new feature in a monorepo while the developer researches a related issue. Actually modifying the code manually becomes less and less necessary.

The context capacity problem

Other AI coding tools are limited by their context windows, typically 8K to 32K tokens. That sounds like a lot until you realize it's maybe 20-30 files' worth of code. In a real codebase, understanding how to implement a feature often requires context from:

- The existing API patterns and data models

- How similar features were implemented elsewhere

- The testing patterns and infrastructure setup

- Configuration files and environment variables

- Documentation and architectural decisions
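To make the arithmetic concrete, here is a rough back-of-the-envelope sketch in Python. It assumes the common rule of thumb of about 4 characters per token (an approximation, not a real tokenizer) and a hypothetical repository where every file is 5,000 characters long. The function name and the numbers are illustrative assumptions, not measurements.

```python
# Assumption: ~4 characters per token. Real tokenizers vary by model and language.
CHARS_PER_TOKEN = 4


def files_that_fit(file_sizes_chars: list[int], window_tokens: int) -> int:
    """Count how many files fit in a context window when added in order."""
    used_tokens = 0
    fitted = 0
    for size in file_sizes_chars:
        cost = size // CHARS_PER_TOKEN  # estimated tokens for this file
        if used_tokens + cost > window_tokens:
            break  # the next file would overflow the window
        used_tokens += cost
        fitted += 1
    return fitted


# Hypothetical repo: 100 files of 5,000 characters (~1,250 tokens) each.
repo = [5_000] * 100
print(files_that_fit(repo, 8_000))   # files that fit in a window of 8,000 tokens
print(files_that_fit(repo, 32_000))  # files that fit in a window of 32,000 tokens
```

Under these assumptions, the larger window holds only a couple dozen files, and that is before leaving any room for the prompt or the model's response.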

Context Engine: Beyond simple file search

Our Context Engine doesn't just search for keywords; it understands semantic relationships between code concepts. When you ask it to implement a new API endpoint, it automatically pulls in the related code it needs.

The engine maintains a real-time index of your codebase, tracking not just what code exists but how different pieces relate to each other. This means when you're working on authentication, it knows to surface your existing auth patterns, middleware, and related tests, even if they're scattered across dozens of files.
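As a loose illustration of what "tracking how pieces relate" can mean, the sketch below builds a toy import graph over a handful of Python modules and looks up everything connected to one of them. This is not how the Context Engine works internally; it is a purely structural stand-in for a much richer semantic index, and the module names and function names are hypothetical.

```python
import ast


def build_import_graph(sources: dict[str, str]) -> dict[str, set[str]]:
    """Map each module to the set of in-repo modules it imports."""
    graph: dict[str, set[str]] = {module: set() for module in sources}
    for module, code in sources.items():
        for node in ast.walk(ast.parse(code)):
            if isinstance(node, ast.Import):
                names = [alias.name for alias in node.names]
            elif isinstance(node, ast.ImportFrom) and node.module:
                names = [node.module]
            else:
                continue
            for name in names:
                # Ignore the standard library and third-party packages.
                if name in sources and name != module:
                    graph[module].add(name)
    return graph


def related_modules(graph: dict[str, set[str]], target: str) -> list[str]:
    """Return modules that import `target` or that `target` imports."""
    importers = {m for m, deps in graph.items() if target in deps}
    return sorted(importers | graph.get(target, set()))


# Hypothetical repository contents, keyed by module name.
sources = {
    "auth": "import tokens\n",
    "tokens": "import hashlib\n",
    "middleware": "from auth import check\n",
    "test_auth": "import auth\n",
    "billing": "import tokens\n",
}
graph = build_import_graph(sources)
print(related_modules(graph, "auth"))  # ['middleware', 'test_auth', 'tokens']
```

Even this crude graph surfaces the middleware and tests around `auth` without any keyword match on the word "auth" in the query itself; a semantic index goes further by relating code that shares concepts rather than just imports.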

Memories: Learning your patterns over time

Beyond just understanding your current codebase, Augment Agent learns and remembers your coding patterns, preferences, and project-specific conventions. These Memories persist across conversations and continuously improve the quality of generated code.

Once the Agent learns a pattern, it automatically applies it to new code, maintaining consistency across your entire codebase without you having to specify it every time.

Native tool integrations

Most AI assistants live in isolation within your code editor. But real development work spans multiple tools: GitHub for code review, Linear for issue tracking, Notion for documentation, and Slack for team communication.

We built native integrations for the tools developers actually use:

- GitHub: Understands your PR patterns, issue history, and repository structure

- Linear: Connects tasks to code changes and understands project context

- Notion: Pulls in relevant documentation and architectural decisions

- Jira: Links development work to business requirements

- Confluence: Accesses team knowledge and process documentation

These aren't just API connections; they're deep integrations that understand the relationships between your code and your broader development workflow.

Model Context Protocol

We've fully embraced the Model Context Protocol (MCP), an emerging standard for connecting AI systems to external tools and data sources. This means Augment Agent can connect to virtually any system in your development stack.
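For readers new to MCP, it helps to see what travels over the wire. MCP messages are JSON-RPC 2.0, and a client invokes a server-side tool with the `tools/call` method. The sketch below only builds such a request in Python; the tool name `search_logs` and its arguments are hypothetical, and a real client would also handle initialization, transport, and responses.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request ids.
_request_ids = count(1)


def tools_call_request(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool exposed by a monitoring server.
request = tools_call_request("search_logs", {"query": "status:error", "limit": 20})
print(request)
```

Because every tool is called through the same message shape, supporting a new system is mostly a matter of pointing the agent at another MCP server rather than writing a bespoke integration.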

Early adopters are already using MCP connections to:

- Debug production issues by connecting to monitoring tools like Datadog

- Automate deployment workflows through Vercel and Cloudflare integrations

- Access internal documentation and knowledge bases

- Connect to custom internal tools and APIs

MCP is still evolving, but we're committed to contributing to the standard and ensuring Augment Agent works seamlessly with the entire ecosystem of development tools.

Real-world testing in production codebases

We didn't build Augment Agent in isolation. Throughout development, we worked with teams managing codebases of every size, from early-stage startup projects to enterprise monorepos with hundreds of thousands of files.

The feedback was consistent: context is everything. Teams reported that Augment Agent was the first AI tool that could actually understand their existing architecture well enough to suggest meaningful improvements and implement complex features that span multiple systems.

What's next for AI-powered development

Augment Agent represents our first step toward AI that truly understands software development as a collaborative, context-rich process. We're already working on capabilities that will further bridge the gap between AI assistance and human expertise.

The future of development isn't about replacing developers; it's about amplifying human creativity and problem-solving by removing the friction of context switching and repetitive implementation work.